In a rapidly evolving technological world, AI-based personal chatbots have become indispensable assistants in our daily lives. From scheduling appointments to explaining complex topics in simple terms, these digital assistants have transformed the way we interact with technology. But have you ever wondered about the secret behind their ability to understand and respond to our needs? The answer lies in a fascinating mechanism called “embedding.” In this blog post, we will explore the concept of embedding and how it enhances the personalization capabilities of chatbots.

What is Embedding?

In its simplest form, embedding is a technique used in natural language processing (NLP) to convert words and phrases into numerical vectors. These numerical representations capture the semantic meaning of the text: words with similar meanings end up with vectors that point in similar directions. This lets a chatbot compare and search text by meaning before it generates a human-like response. You can think of it as translating human language into the language of machines.
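To make this concrete, here is a minimal Python sketch that compares a few vectors with cosine similarity, a common way to measure how close two embeddings are. The four-dimensional vectors and the words attached to them are hypothetical values picked for illustration, not output from a real model.

```python
import math

# Hypothetical 4-dimensional embeddings, hand-picked for illustration.
# Real models learn vectors with hundreds or thousands of dimensions.
embeddings = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.1],
    "car": [0.1, 0.0, 0.9, 0.8],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(round(cosine_similarity(embeddings["cat"], embeddings["dog"]), 2))  # 0.99
print(round(cosine_similarity(embeddings["cat"], embeddings["car"]), 2))  # 0.12
```

Related words (cat, dog) score close to 1, while unrelated ones (cat, car) score much lower. Real embedding models apply the same comparison to much larger vectors.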

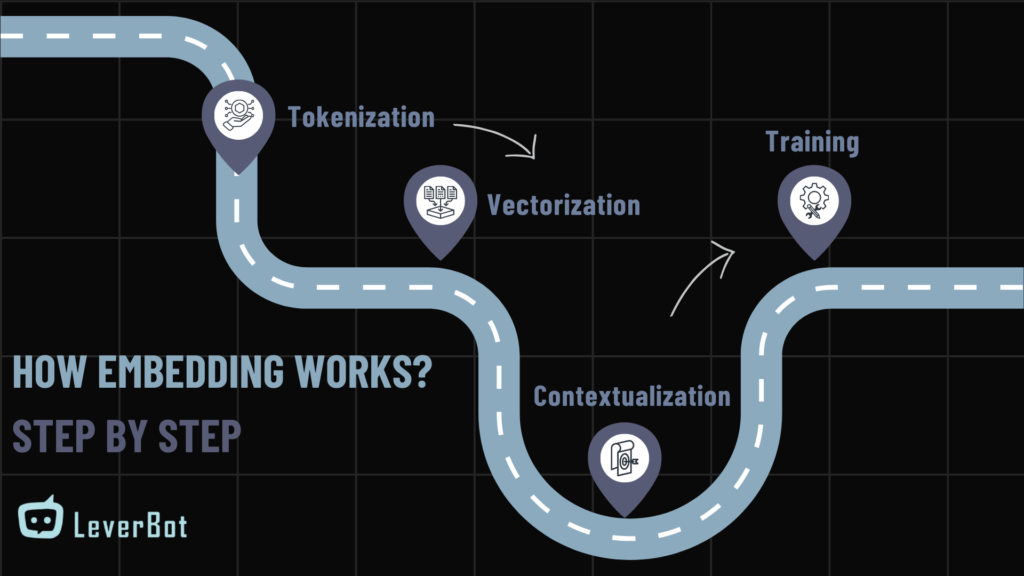

How Does Embedding Work?

1. Tokenization: The first step in the embedding process is tokenization, where the text is broken down into smaller units called tokens. These tokens can be words, subwords, or even characters, depending on the tokenizer the language model uses.

2. Vectorization: Once the text is tokenized, each token is converted into a numerical vector: a list of numbers that represents the token’s meaning in a form the machine can process. The number of dimensions in these vectors ranges from a few dozen to several thousand, depending on the model.

3. Contextualization: Classic techniques like Word2Vec and GloVe learn from the contexts in which words appear, but they still assign each word a single, fixed vector. Contextual models such as BERT go a step further and produce a different vector for each occurrence of a word, based on the words around it. For example, the word “bank” can mean a financial institution or the side of a river; a contextual embedding captures the intended meaning from the surrounding words.

4. Training: The vector values are learned by training the model on vast amounts of text data. During training, the model picks up the relationships and patterns between words, which is what makes the resulting embeddings meaningful.
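The first two steps can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: the tokenizer simply splits text into words (real models usually use subword tokenizers), and the lookup table is filled with random numbers where a trained model would hold learned values. It also shows why contextualization matters: a plain lookup gives “bank” the same vector in both sentences.

```python
import random
import re


def tokenize(text):
    # Naive word-level tokenizer (assumption); real models usually use subwords.
    return re.findall(r"[a-z0-9']+", text.lower())


def build_embedding_table(tokens, dim=8, seed=0):
    # Hypothetical lookup table: one random vector per vocabulary entry.
    # A trained model would hold learned values here instead.
    rng = random.Random(seed)
    vocab = sorted(set(tokens))
    return {tok: [rng.uniform(-1, 1) for _ in range(dim)] for tok in vocab}


def embed(text, table):
    # Vectorization: replace each token with its vector (a static lookup).
    return [table[tok] for tok in tokenize(text)]


s1 = "I deposited cash at the bank"
s2 = "We had a picnic on the river bank"
table = build_embedding_table(tokenize(s1) + tokenize(s2))

v1 = embed(s1, table)
v2 = embed(s2, table)

print(tokenize(s1))  # ['i', 'deposited', 'cash', 'at', 'the', 'bank']
# A static lookup ignores context: "bank" gets the same vector in both
# sentences, which is the limitation contextual models such as BERT address.
print(v1[-1] == v2[-1])  # True
```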

The Role of Embedding in Chatbot Personalization

Now that we understand the basics of embedding, let’s explore how it contributes to the personalization capabilities of chatbots.

Understanding User Intent

One of the most crucial features of a chatbot is its ability to understand user intent. Embeddings help here because requests with similar meanings map to nearby vectors, even when they are worded differently. By converting user inputs into numerical vectors, the chatbot can compare them with what it already knows, analyze their semantic content and context, and interpret user requests more accurately.

For instance, when a user types, “Can you schedule a meeting with Dr. Smith for next Monday?” the chatbot can break down the text, understand the intent (scheduling a meeting), and extract relevant details (Dr. Smith, next Monday) to carry out the task.
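One simple way to turn embeddings into intent detection is to compare the user's message with a few example phrasings of each intent and pick the closest match. The sketch below assumes hypothetical intent names and examples, and uses a bag-of-words count vector as a stand-in for a learned embedding so that it runs without any model download.

```python
import math
import re
from collections import Counter


def embed(text):
    # Stand-in "embedding": bag-of-words counts (assumption).
    # A real chatbot would call a learned embedding model here.
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


# Hypothetical intents, each with a few example phrasings.
INTENT_EXAMPLES = {
    "schedule_meeting": [
        "schedule a meeting with my doctor",
        "book an appointment for monday",
        "set up a meeting next week",
    ],
    "weather": [
        "what is the weather tomorrow",
        "will it rain today",
        "weather forecast for the weekend",
    ],
}


def detect_intent(query):
    # Score each intent by its best-matching example; return the top intent.
    q = embed(query)
    scores = {
        intent: max(cosine(q, embed(ex)) for ex in examples)
        for intent, examples in INTENT_EXAMPLES.items()
    }
    return max(scores, key=scores.get)


print(detect_intent("Can you schedule a meeting with Dr. Smith for next Monday?"))
```

This stand-in only matches shared words, so it would miss paraphrases that use entirely different vocabulary; a learned embedding model is what closes that gap.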

Generating Human-like Responses

Embeddings play a critical role in enabling chatbots to generate coherent and contextually appropriate responses. By leveraging pre-trained language models like GPT-4, chatbots can produce text that closely mimics human conversation. The embeddings help the chatbot understand the context of the conversation, allowing it to generate responses that are not only relevant but also engaging.

Personalizing User Experience

Personalization is a key factor in enhancing user experience, and embeddings play a significant role in achieving this. Chatbots can be trained on user-specific data to create personalized embeddings. These embeddings capture user preferences, habits, and past interactions, enabling the chatbot to tailor its responses and recommendations accordingly.

For example, if a user frequently asks for weather updates, the chatbot may prioritize providing weather information as soon as the user initiates a conversation. This level of personalization makes interactions more efficient and satisfying for the user.
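A simple way to represent a user's habits, assuming past queries are stored, is to combine their embeddings into a single profile vector and compare it with the topics the assistant can offer. In this sketch the topic names, descriptions, and query history are hypothetical, and the bag-of-words `embed` function stands in for a learned embedding model.

```python
import math
import re
from collections import Counter


def embed(text):
    # Stand-in embedding (bag-of-words counts); assumption, not a real model.
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def build_profile(past_queries):
    # Sum the embeddings of past queries into one profile vector.
    # Cosine similarity ignores scale, so this ranks the same as an average.
    profile = Counter()
    for q in past_queries:
        profile.update(embed(q))
    return profile


# Hypothetical topics the assistant could offer proactively.
TOPICS = {
    "weather": "weather forecast rain temperature today tomorrow",
    "calendar": "schedule meeting call appointment calendar",
    "news": "latest news headlines",
}


def rank_topics(profile):
    # Order topics from most to least similar to the user's profile.
    scores = {name: cosine(profile, embed(desc)) for name, desc in TOPICS.items()}
    return sorted(scores, key=scores.get, reverse=True)


history = [
    "what's the weather today",
    "weather forecast for tomorrow",
    "will it rain this weekend",
    "schedule a call with Anna",
]
print(rank_topics(build_profile(history)))  # ['weather', 'calendar', 'news']
```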

Handling Multilingual Conversations

In our increasingly globalized world, multilingual capabilities are essential for many chatbots. Multilingual embeddings place text from different languages in a shared vector space, so a question asked in Spanish tends to land close to the same question asked in English. Models such as multilingual BERT (mBERT) and GPT-4 are trained on text in many languages, allowing chatbots to comprehend and respond to queries in multiple languages.

For instance, if a user asks, “¿Cuál es el pronóstico del tiempo para mañana?” (“What is the weather forecast for tomorrow?”), the chatbot can convert the query into embeddings, understand it, and reply with the forecast in Spanish.

Challenges and Obstacles

While embeddings have significantly advanced the capabilities of chatbots, there are still challenges to overcome. One of the primary concerns is bias in the training data, which can lead to biased embeddings and, consequently, biased chatbot responses. Researchers are actively working on techniques to identify and mitigate these biases to make chatbot interactions fairer.
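One family of mitigation techniques works directly on the vectors: find a direction that encodes an unwanted attribute (for example, the difference between “he” and “she”), measure how far other words lean along it, and subtract that component. The sketch below illustrates this “neutralize” idea, known from hard-debiasing methods for word embeddings, on hypothetical three-dimensional toy vectors. Real debiasing involves more steps and does not remove bias entirely.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def bias_direction(a, b):
    # Unit vector pointing from word b toward word a.
    return normalize([x - y for x, y in zip(a, b)])


def bias_score(v, direction):
    # Positive leans toward the first word of the pair, negative toward the second.
    return dot(v, direction)


def neutralize(v, direction):
    # Remove the component of v that lies along the bias direction.
    p = dot(v, direction)
    return [x - p * d for x, d in zip(v, direction)]


# Hypothetical toy vectors; the first axis deliberately encodes gender.
vectors = {
    "he": [1.0, 0.2, 0.1],
    "she": [-1.0, 0.2, 0.1],
    "nurse": [-0.6, 0.5, 0.4],
    "engineer": [0.7, 0.4, 0.5],
}

g = bias_direction(vectors["he"], vectors["she"])
for word in ("nurse", "engineer"):
    before = bias_score(vectors[word], g)
    after = bias_score(neutralize(vectors[word], g), g)
    print(f"{word}: {before:+.2f} -> {after:+.2f}")
```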

Another challenge is the computational resources required to train and deploy advanced embedding models. As these models become more complex, they demand more processing power and memory, which can be a barrier for smaller organizations.
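A rough sense of the memory cost comes from simple arithmetic: an embedding table stores one vector per vocabulary entry, so its size is vocabulary size × dimensions × bytes per value. The sizes below are illustrative assumptions rather than figures for any specific model, and the table is only one part of a model's total memory footprint.

```python
def embedding_table_bytes(vocab_size, dim, bytes_per_value=4):
    # One `dim`-length vector per token; float32 uses 4 bytes per value.
    return vocab_size * dim * bytes_per_value


# Illustrative (vocabulary size, dimensions) pairs; assumptions only.
for vocab, dim in [(30_000, 300), (50_000, 768), (100_000, 4096)]:
    mb = embedding_table_bytes(vocab, dim) / 1e6
    print(f"vocab={vocab:,} dim={dim}: {mb:,.1f} MB")
```

With these assumptions the table alone grows from 36 MB to over 1.6 GB. Storing values at lower precision (for example, 2 bytes per value instead of 4) halves that size, which is one common way to reduce these costs.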

Conclusion

Embeddings are the backbone of modern chatbot technology, enabling these digital assistants to understand, interpret, and respond to human language with remarkable accuracy. By converting text into numerical vectors, embeddings capture the semantic meaning and context, allowing chatbots to generate human-like and personalized responses. As we continue to advance in the field of natural language processing, embeddings will play an increasingly vital role in shaping the future of AI-based personal chatbots like Leverbot. Whether it’s scheduling appointments, providing information, or engaging in meaningful conversations, the magic behind all these fascinating interactions lies in embeddings.